Using information technology, important data may be retrieved in the most efficient manner, which is vital in today’s competitive market. The data warehouse has become more crucial in businesses to store information about a company’s daily operations in databases.

This knowledge and data are useful. As a result of the decision-making that takes place throughout the process, it may be vital to the success of companies. That’s why it’s so critical to know what a data warehouse is.

I’ve compiled a list of often-asked questions to aid you in your job interview. This article is divided into:

- Basic Data Warehouse Interview Questions

- Intermediate Data Warehouse Interview Questions

- Advanced Data Warehouse Interview Questions

Looking to hire the best remote developers? Explore HireAI to see how you can:

⚡️ Get instant candidate matches without searching

⚡️ Identify top applicants from our network of 300,000+ devs with no manual screening

⚡️ Hire 4x faster with vetted candidates (qualified and interview-ready)

Try HireAI and hire top developers now →

Basic Data Warehouse Interview Questions

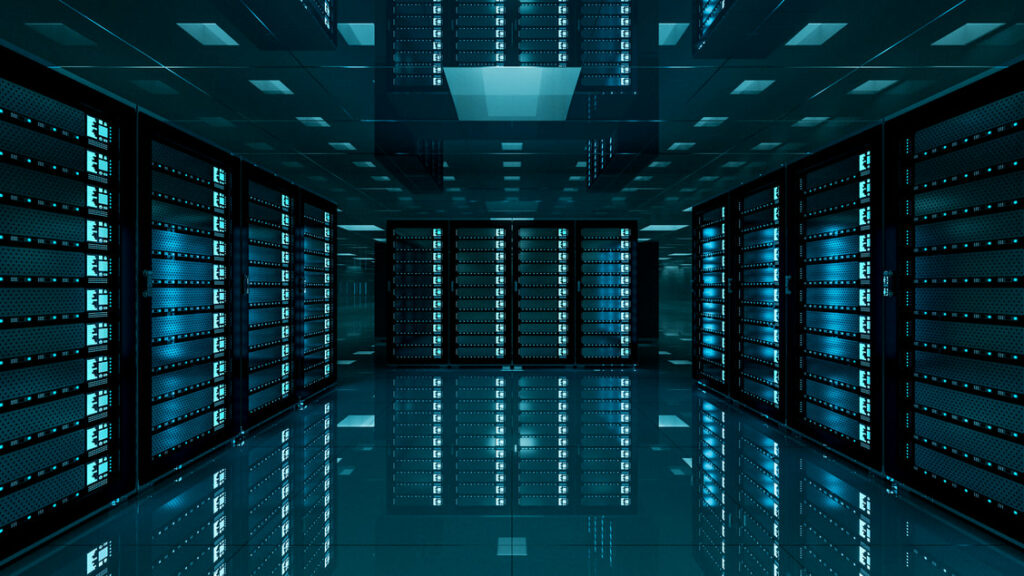

1. What is a data warehouse?

If you’re looking for a way to keep track of a bank’s activity records, for example, a data warehouse is a place to go. In the 1980s, it emerged as a notion to inspire corporate data systems from academic institutions. This approach has developed over time and may now be found in various BI systems.

The data warehouse makes it possible to organize and have a data warehouse, making it simpler to develop reports and analyze vast volumes of data and get insights, which may be crucial in the decision-making process of a firm. The system uses data mining to integrate information from many systems scattered across an organization. As a result, queries, reports, and analyses for decision-making are arranged in many tiers.

2. What’s the difference between a data warehouse and data mining?

The difference between data warehouse and data mining is a basic difference to understand. The candidate should be able to explain each with ease when asked by the interviewer.

What is data mining?

Data mining is a technique for uncovering information that you didn’t know you had. You can only obtain known information from the data using the standard query method. Data mining, on the other hand, gives you the ability to uncover previously unknown information. Through the process of data mining, it is possible to get useful information from databases.

Known as KDD, knowledge discovery in databases shows patterns and relationships. An object’s characteristics may also form a connection with another object. One of the results of data mining is called a pattern because it shows a regular and easy-to-follow sequence of data.

For the sake of a quick recap, the first stage in data mining on a large dataset is called “selection.” Remove inconsistencies from the data before going on to preprocessing. Next, the data is translated into a format that can be used for data mining. In the next step, data mining techniques are used to analyze the data. Finally, the process of interpreting and evaluating data includes identifying patterns or relationships among the information.

What is a data warehouse?

Information gathered from diverse sources and kept in a single, uniform structure may be found at the Data Warehouse, a central place. Data is first obtained, then cleansed, converted, and stored in a data warehouse from a variety of corporate sources. It is possible to access data in a data warehouse for an extended length of time after it is first stored there.

Data modeling, data collecting, data administration, metadata management, and development tool store management are all part of the data warehouse. These technologies can help with data extraction, data transformation, data warehousing, and user interfaces, as well as many other things.

Main difference

In order to distinguish between data mining and data warehousing, one must first perform data extraction, the process by which useful information is gleaned from a large database or warehouse. As a result of this, data mining can be done more quickly and effectively with the help of a data warehouse, which houses all the data in one place.

Read More: 8 Common Interview Mistakes Remote Software Developers Make

3. Data warehouses provide what advantages?

A data warehouse (DW), like any business tool, has its advantages and disadvantages compared to other alternatives. The candidate must demonstrate this knowledge, as well as be able to understand at what point his company will need each solution in case the interviewer questions him.

For those who wish to implement business intelligence initiatives, a DW is a necessary tool. However, it also has additional benefits. Here are a few examples:

- Data centralization: When a corporation relies on a DW, all of its data is housed in a single location. Data can be found more rapidly in this manner, and data discrepancies may be eliminated.

The organization also faces the danger of missing out on all the data that is being created all the time without this technology. - Analysis made easier: Data warehouses also make the process of evaluating databases simpler since they give a larger picture of the information set. This avoids errors and gives data that may be used to identify patterns and make strategic decisions.

- Simplified access: Performing this data analysis often necessitates the involvement of many members of the company’s staff. As a result, it is critical that access be made as simple as possible. Allowing quick access to authorized individuals is another benefit of DW.

- Reporting: Another benefit is that reports may be generated without the assistance of programmers with specific knowledge. Because Data Warehouse products help non-technical users manipulate and produce consistent results, the workflow is made easier by this fact.

4. What is an aggregate table?

Aggregation is the collection and presentation in summary form of data for statistical analysis and the achievement of commercial goals. If your candidate wants a complete picture of your company’s operations, they need to be able to look through huge amounts of raw data.

Your candidate should also be familiar with the term “data aggregation.” In the context of statistical analysis, data aggregation refers to the collection and presentation of data in a summary form. The most common reason for data aggregation is to learn more about certain demographics, such as age, occupation, or income.

What are the advantages of constructing aggregate or roll-up tables in this regard?

For the queries you still have, you may pre-aggregate your older data using rollup tables. You may then discard or roll off older data to slower storage, saving space and computational resources. You no longer need to keep all of the older data.

Read More: 8 Behavioral Interview Questions Asked by Top Tech Companies

5. What do you understand about Star Schema?

A clear understanding of the Star Schema is essential in developing a data warehouse, as it involves building complex databases as well. The candidate should elaborate on the advantages and disadvantages of this approach.

As part of his vision for database modeling for decision-support systems, Dr. Ralph Kimball came up with the Star Schema idea. Performance is boosted due to the availability of a lot of duplicated data.

A data warehouse’s star schema is one of many data modeling approaches.

Dimensional tables are connected to fact tables to model the data. For each event, the dimensional tables keep track of its specifics. Events that have happened and their accompanying dimensional table attributes are stored in the fact table.

The name was chosen because of the model’s resemblance to a celestial body. The fact table sits in the “heart” of the star, surrounded by auxiliary tables referred to as dimensions. Multiple joins link the fact table to the other dimensions, but just one join connects the dimension tables to the fact table.

Properties

- an all-in-one fact table that contains all of the relevant information;

- each dimension has its own table;

- only one primary key exists for each dimension in the fact table;

- each key is generated.

An individual, highly-denormalized table may be represented by each dimensionality.

Advantages

Many people like this because it is easy to understand, has fewer joins, and needs less upkeep.

Disadvantages

There is a lot of redundancy in dimension tables since they are not standardized. A simple change, like the name of a country, might require a lot of database changes, making it unsuitable for transactions (for all the rows of municipalities).

6. Explain what dimension tables and fact tables are.

Fact and dimension tables are extremely important components when building a data warehouse. Knowing what is recommended and what to avoid is also a good idea when the interviewer asks the candidate.

When creating a data warehouse, fact and dimension tables are the two most important tables. Surrogate keys connect the fact table to the dimension tables, which provide measurements for the columns.

Measure columns are the data that you maintain in order to measure business facts. Measure columns in a data warehouse could include things like sales revenue or shipment volume. The dimension characteristics are used to examine these metrics. Because of this, a fact table should have surrogate keys for dimension tables.

Text columns should be avoided in fact tables. With regard to fact tables, for example, status columns are common. Ultimately, this method will result in many status columns in the fact table. Data warehouse designers might do better by moving all status information into a “junk-dimension” and using another surrogate key to link combinations with fact tables.

However, there may be instances where columns like “Order number” must be stored in the fact tables. For debugging reasons, these columns should be placed in the fact table, rather than the audit table.

Read More: 10+ Tips for Preparing for a Remote Software Developer Zoom Interview

Check out our entire set of software development interview questions to help you hire the best developers you possibly can.

If you’re a developer, familiarize yourself with the non-technical interview questions commonly asked in the first round by HR recruiters and the questions to ask your interviewer!

Arc is the radically different remote job search platform for developers where companies apply to you. We’ll feature you to great global startups and tech companies hiring remotely so you can land a great remote job in 14 days. We make it easier than ever for software developers and engineers to find great remote jobs. Sign up today and get started.

Intermediate Data Warehouse Interview Questions

1. What are the advantages of having a cloud-based data warehouse?

Many companies have different architectures for storage solutions: a local data warehouse or a cloud-based data warehouse both have their advantages and disadvantages. The candidate should demonstrate knowledge in the decision process in choosing the most suitable tools.

With on-premises storage, analytics and business intelligence (BI) activities are less efficient than they are with cloud data warehouses. On-premises systems have the added drawback of having restricted scalability and higher upgrade expenses.

Using a legacy data warehouse, on the other hand, puts you at risk in a number of areas, including security and compliance. Cloud-based data warehousing, on the other hand, may be able to solve many of these problems for forward-thinking businesses.

Because of the increasing need for analytics, traditional data storage architectures can no longer meet these demands. A forecasted $3.5 billion market for cloud data warehousing proves that conventional data warehouses cannot give enterprises the speed, scalability, and agility they are searching for.

Data warehouses hosted in the cloud tend to be less time-consuming to set up than those hosted on-premises. Cloud-based data warehouses assure compliance and may be more secure than historical data warehouses since they were built with the ever-changing needs of enterprises in mind. Companies no longer have to choose between on-site and off-site storage options when they need to manage a lot of data. Cloud DWHs have changed that.

User-friendliness

Accessibility and adaptability are lauded as virtues in today’s workplaces. However, how true would these claims be if their data storage technology limited them to a single place?

Thanks to globalization, companies now have offices in several countries across numerous continents. With the rise of working from home, businesses need to set up a system that allows workers to get data and make important decisions no matter where they are.

Cloud data warehouses can be accessed from any location on the globe, but on-premise data warehouses can only be accessed from a single location. Access control measures in contemporary data warehouses make sure only the right people have access to the data required for business insight.

Because contemporary data warehouses don’t compromise on data quality for accessibility, firms don’t have to worry about their data quality being compromised when numerous workers use the warehouse simultaneously.

The ability to adapt and grow

Designed for the contemporary corporation, cloud data warehouses are ideal. Cloud-based data warehouses enable enterprises to dynamically allocate resources based on changing business needs.

As an example, a tourist company may need greater computer power for better analytics during the peak season, but just a fraction of that processing capacity during the off-season. On-premises data warehouses do not allow firms to pay just for the resources and capabilities they need, which is impossible with a cloud DWH.

Increased efficiency

In most cloud data warehouses, the workload is distributed across many servers. Large volumes of data may be handled at the same time on these servers without any interruptions.

Organizational data is typically handled in a variety of ways by various departments. This data may be used by the marketing department, for example, in order to get insight into which marketing channels are more effective with certain demographics. Instead, the customer support staff would need data from various touchpoints to determine where the most complaints and comments have been made.

In a modern cloud-based data warehouse, all of an organization’s departments can access important data and make decisions that are based on facts. This can improve productivity. In addition to those stated, there are several more, such as increased data storage, expanded integration, and improved disaster recovery, that should be added to the list.

2. Explain OLAP in the context of a data warehouse.

OLAP is a very interesting analytical solution, even for business-oriented professionals. The candidate should explain the different forms of OLAP and how they are related.

Business analysts, managers, and executives can quickly and consistently use OLAP software to analyze and show data from their company. This software is called OLAP. Analytical and navigational operations can be done at the same time by people who use OLAP systems because they can look at an organization’s aggregated data in a variety of ways.

Denormalized databases are the standard for OLAP (Online Analytical Processing) systems. It is possible to find and show information using these tools, which are able to traverse across data warehouses.

OLAP Architectures

- ROLAP (Relational Online Analytical Processing): The query is sent to your relational database server while the cube is on your server.

- MOLAP (Multidimensional Online Analytical Processing): Processing done in a multidimensional server.

- HOLAP (Hybrid Online Analytical Processing): ROLAP and MOLAP are both used, which is a hybrid of the two.

Read More: Phone Screen Interview vs Actual Phone Interview: Learn the Differences

3. Can you explain the data warehouse architecture?

The architecture of software is extremely complex, but the candidate should know what are the essential components for building a functional data warehouse that meets his or her expectations. In addition, you may ask this to identify the candidate’s knowledge of the different types of possible data warehouse architectures.

The Architecture of a Single-Tier Data Storage Facility

When data is stored in a single-tier data warehouse, it is more densely packed, which minimizes the overall amount of data. This form of warehouse architecture, although advantageous for removing redundancies, is not ideal for organizations with complicated data needs and frequent data flows. When it comes to handling increasingly sophisticated data flows, layered data warehouse designs come into play.

The Architecture of a Data Warehouse with Two Levels

Two-tier data warehouse models, on the other hand, isolate data sources from the warehouse. With the two-tier architecture, one system and one database server are used instead of two. The two-tier architecture is most often utilized by small firms with a single server acting as a data warehouse. Because of its two-tier design, the two-tier building cannot be scaled. The number of people who can utilize it is also limited to a small number.

Data Warehouse Design using a Three-Tier Structure

When you have a three-tier data warehouse architecture, data moves from raw data to important insights in an orderly way. Sometimes, the database server, which makes sense of data from many sources, like transactional databases used by front-end users, is at the bottom of the data warehouse paradigm.

It’s important to have an OLAP server in the intermediate layer. This layer reorganizes the data in a way that makes it easier for the user to conduct a wide variety of analyses and investigations. In its design, it already has a built-in OLAP server that can be used to analyze data.

The tools and API used for high-level data analysis, querying, and reporting are included in the third and topmost tier, the client. People tend to overlook the fourth layer of the data warehouse architecture since it isn’t as critical to the overall design as the other three layers.

The Architecture of a Data Warehouse

- The Data Source: An enterprise’s transactional systems might be made up of a variety of data types.

- Data Stage: A storage space and a series of operations make up the structure. As the name implies, it’s responsible for extracting data from transactional systems and then doing various data preparation tasks before it can be loaded into the data warehouse. The end user does not see any of this information.

- Presentation Server: Environment in which data may be accessed by end users. OLAP technology (Online Analytical Processing) may also be used to store data on these servers, as many data marts only function with data in dimensional form.

- Data Mart: Here, the data warehouse is broken down into groups based on the departments or views that users need.

- Data Mining: This technique analyzes enormous amounts of data in order to find hidden patterns and relationships. An automated scanning tool is needed to look for trends and patterns based on predefined criteria, which aren’t likely to be found by a simple search in data warehouses, so it’s important to have one.

- Data Warehouse Reporting Layer: Access to the BI interface or BI database architecture is provided via the reporting layer in the warehouse. The reporting layer of a data warehouse is like a dashboard for looking at data, making reports, and getting all the important information.

4. In the context of data warehousing, what is data purging?

Data purging is a central term in the life cycle of a data warehouse. The candidate should demonstrate knowledge of the term, and demonstrate their process for deciding when data needs to be purged.

When no longer needed, outdated data may be permanently removed from a storage place via data cleansing. Data purges are often based on the age of the data or the nature of the data. An archive is a copy of deleted material that has been preserved in a different storage place.

Data may be deleted from the main storage site, but it can be recovered and restored from an archive copy in the event of a future necessity. Data may be permanently deleted from a storage place, but the delete operation doesn’t preserve a backup.

Read More: How to Write a Great Thank-You Email After an Interview

5. What is the relationship between data warehousing and business intelligence?

This is a very important question. Many people perceive a data warehouse resource as a resource that is only interesting to a data engineer, or other technical position that is responsible for managing this architecture. However, the candidate must identify the role of the business intelligence professional in analyzing the data available in a data warehouse.

Business intelligence (BI) and advanced analytics are two of the primary functions of a data warehouse, which is a sort of data management system. For sophisticated query and analytical reasons, data warehouses are often built to hold a huge quantity of historical data. They get data from application log files and apps that do business.

A data warehouse is a place where enormous volumes of data from many sources may be gathered and consolidated. Organizations may utilize their analytical skills to get valuable business insights from their data and make better decisions. Data scientists and business analysts may greatly benefit from the accumulation of historical records. A data warehouse might be regarded as the “one true source” of an organization because of these features.

Advanced Data Warehouse Interview Questions

1. Data warehousing requires a certain set of abilities from an IT expert. Can you cite them?

In a business environment, the data warehouse interests more than one type of professional. The candidate should have each utility well defined when you ask them this question. Precisely because of the ways in which a data engineer and a data analyst differ when it comes to using the resources of a data warehouse, you’ll be interested in the candidate’s answer.

Depending on the job they’re applying for, they’ll need a different set of talents. Data warehousing interviews may be conducted for a variety of positions. Data warehousing can lead to a wide range of jobs, each of which requires a certain set of skills.

The responsibilities of business intelligence

In this category are positions like business intelligence expert and business intelligence analyst. To succeed in this position, candidates should be able to think critically, analyze data, solve problems, and communicate effectively, as well as understand the sector. There are several responsibilities that fall within the purview of a business intelligence specialist.

Positions in the field of data analysis

Data analysts, data scientists, and data architects are all possible job titles for someone working in this field. These experts are very knowledgeable. Mathematical aptitude, data analytic abilities, expertise with data modeling, familiarity with programming languages like SQL, a focus on deadlines, and the ability to work effectively in a group are all desirable qualities. Those who work in the IT department and may also be developers are included in this group.

The responsibilities of software developers

Depending on their qualifications and experience, a software developer may find himself in an interview for a data warehousing position. For example, anybody who needs to write SQL code for a database would benefit from being familiar with the language. Specific credentials, logical thinking, attention to detail, as well as knowledge of backend infrastructure are all examples of desirable traits among job candidates.

2. What is the Chameleon Method?

The chameleon method is a very important concept in the data warehouse context, precisely because its main use is to work on the disadvantages that some components of a data warehouse may have. The interviewer will be interested in a simple and straightforward explanation of the chameleon method in the context of data warehousing.

In data warehousing, traditional models and approaches have limitations. Chameleon is a hierarchical clustering algorithm that addresses these drawbacks. Data items are represented by nodes and weights by edges in a sparse graph that is used for this algorithm’s implementation. The ability to produce and work on big data sets is made possible by its representation.

The two-phase methodology is used to locate the clusters in the data set:

- Graph partitioning is the initial step, which allows for the creation of a large number of subclusters.

- Agglomerative hierarchical clustering is used in the second phase of the analysis to find real clusters that can be joined with the sub-clusters that are generated.

Read More: 15+ Most Popular Programming Languages & Technologies to Know

3. Is there a standard way to build a data warehouse?

There are several processes for building a data warehouse. Many processes, however, have commonalities among them. The candidate should address these commonalities and address the advantages of this cycle when asked by the interviewer.

Before anything else, we need to have a clear picture of what the user is looking for. We begin by comparing the dimensions and facts required to meet the needs of the management. First, we focus on the DW’s content, not its implementation, so we don’t have to worry about the data’s actual presence.

- Data Mapping: Identifying the data’s origins and determining the best path to them is accomplished via a process known as data mapping. By examining the availability of relevant data, we may now check whether or not the desires outlined in the previous stage can be realized.

- Staging Area Construction: Following mapping, we create a structure called the Staging Area, which serves as a transition area for DW data. and Dimensions tables get the necessary treatment for future loads in this section, where data is transferred and isolated from the operational systems (OLTP).

- Dimensions Constructions: At this stage, the framework for the dimensions in the DW is put together. In the dimensions, we additionally specify the degree of historicity to be assigned to the data.

- Facts Constructions: In this stage (after the creation of the Dimensions), we create the Fact’s underlying structure (s). This section evaluates and specifies the level of detail to be stored in each fact. Growth and storage capacity are also assessed as part of this process.

- Definition of the General Loading Process: After finishing the preceding phases, we need to build the engine that will allow everything to be loaded, updated, coordinated, and processed in an orderly manner. As a result, the DW’s “brain” is the overall loading process.

- Metadata Creation: In order to complete the metadata documentation, we must first create the build process and the data dictionary. Knowledge management relies heavily on metadata.

Remember that the Data Mart is partitioning the DW into subsets of information structured by certain topics. There should be no need to repeat this process unless there is a necessity to do so (which should be done just once, if possible).

Because of their end-to-start reliance, it’s critical that you follow the instructions exactly as they’re listed. To put it another way, once the previous phase is done, then the next step may begin.

Finally, if each of these actions is given the required attention and is completed successfully, the odds of success in the DW building project are almost certain. As a result, we’ll have a central location where we can keep data that’ll be useful to the company when making decisions.

4. The ETL cycle has a three-tiered design. Can you please explain them?

ETL is part of the life cycle of a data warehouse. The candidate should demonstrate mastery by explaining its three phases to the interviewer.

- Extraction

SQL data extraction is the focus of the process’s initial step. A preliminary examination of the data may be done in a transition region at this point. At this point, we have a single format for all of the data that can be manipulated in subsequent phases. First, the data must be significantly standardized since it is so distinct from one another.

- Transformation

The information that was extracted and standardized in the extraction step is adapted in the transformation stage. We do sanitization here, which is the process of transforming data. The aim is to provide just the information that may be successfully used to the management for his or her consideration.

Creating filters for future analysis such as age, location, time, position and hierarchical level may also be done at this stage.

- MOLAP

The third and last phase of the procedure is loading the previously arranged data into a new database. In either a corporate or a departmental setting, this may occur (data mart). After completing the previous step, we repeat the process and fix any additional irregularities in the information flow.

It is feasible to establish a mapping of all patterns by retaining an ordered data model, which makes them available for future usage.

Consider that ETL is not necessarily conducted in a single data processing environment. Any cloud-based or on-premises program may be used to do this task. The data mining process may also be used in a more advanced level and with the job done, so that it is possible to develop and detect new patterns of user, buyer, or supplier behavior.

ETL is necessary for creating and observing data-related dimension structures and facts in a data warehouse environment. DWs are designed to house data that must be acted upon at some time. As we’ve seen in the last section, here is where the Extraction, Transformation, and Load (ETL) operations come into play.

As a result, ETL acts as a backup for data stored in a data warehouse but not yet accessed by users.

Read More: How to Show Off Your Soft Skills at Software Development Interviews

5. Why would you need a real-time data warehouse?

The candidate must demonstrate knowledge of the advantages and disadvantages of applying an RTDW. You’ll be interested to know the differentiating aspects that drive business agents to adopt this solution.

RTDWs look and feel like typical data warehouses, but they are far quicker and more scalable than traditional data warehouses. You can have “small data” semantics and performance on a “big data” scale.

- Because of this, more data is coming into the warehouse more often.

- Data may be accessed instantly, with no need for processing, aggregation, or compaction.

- The pace at which searches are executed has increased: tiny, selected queries are measured in milliseconds, while big, scan- or compute-heavy queries are handled at very high bandwidth.

- Because there aren’t any big changes needed, changes can be made quickly if they need to be made.

Data warehousing has proved that although this may seem straightforward and even easy to some, it is anything but. It is extremely difficult to interact with a large volume of rapidly incoming data, some of which may need to be updated, and a large number of queries with wildly divergent patterns.

Conclusion

You may use these data warehouse interview questions to find the best applicant, whether you’re a professional preparing for an interview or a hiring manager.

Make sure you keep in mind that technical abilities and expertise are only one component of the recruiting process. In order to secure (or identify the ideal applicant for) the job, both past experience and soft skills are crucial.

You can also explore HireAI to skip the line and:

⚡️ Get instant candidate matches without searching

⚡️ Identify top applicants from our network of 250,000+ devs with no manual screening

⚡️ Hire 4x faster with vetted candidates (qualified and interview-ready)